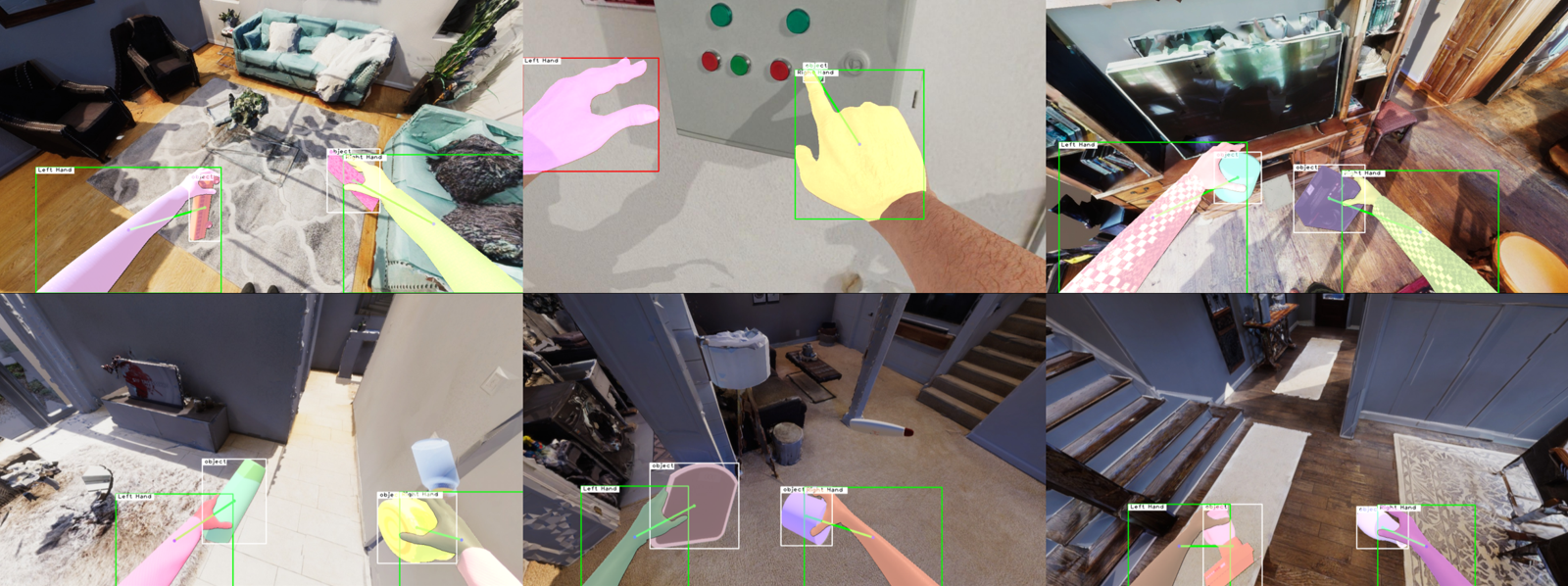

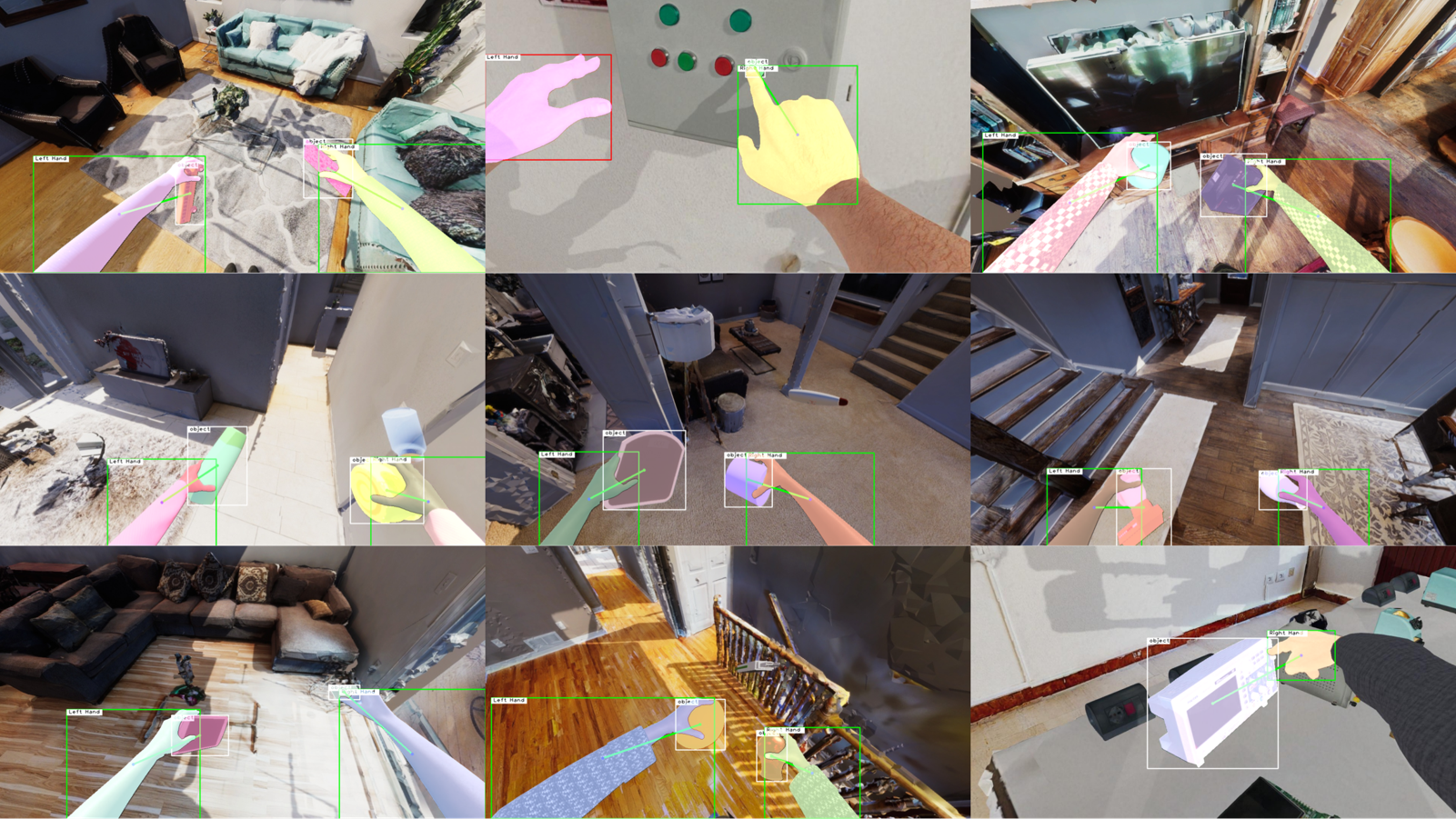

Our pipeline combines state-of-the-art datasets and components to generate accurate egocentric images of hand-object interactions. We first sample a random hand-object grasp from the DexGraspNet dataset, fit it to a randomly generated human model, and attach the object mesh specified by the grasp. We then sample a random environment from the HM3D dataset and place the human-object model in it. Finally, we place a virtual camera at the human's eye level to capture the scene from a first-person point of view.
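The sampling order described above can be sketched as follows. This is a minimal, hypothetical illustration, not the project's code: the `Grasp` and `SceneSample` types, the `sample_scene` function, the 1.55 to 1.90 m height range, the 0.93 eye-height ratio, and the unit-square floor are all assumptions. None of them reflect the DexGraspNet or HM3D formats, and the real pipeline fits a full human body model and renders images rather than returning coordinates.

```python
import random
from dataclasses import dataclass
from typing import Sequence, Tuple

# All types, field names, and numeric ranges below are hypothetical;
# they do not reflect the real DexGraspNet or HM3D data formats.


@dataclass(frozen=True)
class Grasp:
    grasp_id: str
    object_mesh: str  # identifier of the object mesh referenced by the grasp


@dataclass(frozen=True)
class SceneSample:
    grasp: Grasp
    body_height_m: float                         # stature of the generated human
    environment: str                             # identifier of the sampled environment
    body_position: Tuple[float, float]           # floor position (x, y) of the human
    camera_position: Tuple[float, float, float]  # first-person virtual camera


EYE_HEIGHT_RATIO = 0.93  # assumed eye height as a fraction of stature


def sample_scene(grasps: Sequence[Grasp], environments: Sequence[str],
                 rng: random.Random) -> SceneSample:
    # 1. Pick a random hand-object grasp; it names the object mesh to attach.
    grasp = rng.choice(grasps)
    # 2. "Generate" a human body; in this sketch only its height is randomized.
    body_height = rng.uniform(1.55, 1.90)
    # 3. Pick a random environment and a floor position inside it
    #    (a unit square stands in for the navigable floor area).
    environment = rng.choice(environments)
    x, y = rng.uniform(0.0, 1.0), rng.uniform(0.0, 1.0)
    # 4. Put the camera at eye level, directly above the body position.
    eye_z = body_height * EYE_HEIGHT_RATIO
    return SceneSample(grasp, body_height, environment, (x, y), (x, y, eye_z))


if __name__ == "__main__":
    grasps = [Grasp("g0", "mug"), Grasp("g1", "bottle")]
    envs = ["env_a", "env_b"]
    print(sample_scene(grasps, envs, random.Random(0)))
```

Passing an explicit `random.Random` instance keeps each sampled scene reproducible from a seed, which is convenient when a large synthetic set has to be regenerated.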

The HOI-Synth benchmark extends three established egocentric datasets for hand-object interaction detection (EPIC-KITCHENS VISOR, EgoHOS, and ENIGMA-51) with synthetic images automatically labeled by the proposed HOI generation pipeline.

Leonardi, R., Furnari, A., Ragusa, F., & Farinella, G. M. (2023). Are Synthetic Data Useful for Egocentric Hand-Object Interaction Detection? An Investigation and the HOI-Synth Domain Adaptation Benchmark. arXiv preprint arXiv:2312.02672.

[01/07/2024] Accepted at European Conference on Computer Vision (ECCV) 2024!

Cite our paper:

@inproceedings{leonardi2025synthetic,
  title={Are Synthetic Data Useful for Egocentric Hand-Object Interaction Detection?},
  author={Leonardi, Rosario and Furnari, Antonino and Ragusa, Francesco and Farinella, Giovanni Maria},
  booktitle={European Conference on Computer Vision},
  pages={36--54},
  year={2025},
  organization={Springer}
}

Visit our page dedicated to First Person Vision Research for other related publications.